[HOME] -> [ENGINEERING PROJECTS] -> [WARFLYING DRONE]

Warflying Drone

What is “Warflying”?

Warflying is an offshoot of the common hacking practice of wardriving, which is an evolution of wardialing. Wardialing involved calling (dialing) random numbers in an attempt to find vulnarabilities. Wardriving modernized this concept by targetting wireless networks using more sophisticated technologies, such as single board computers (SBCs). Warflying seeks to further evolve this practice by combining drones and SBCs to increase the attack surface available to a hacker.

Required materials:

- Drone with 500g minimum cargo capacity

- Raspberry Pi or comparable SBC

- 2.4GHz/5.8GHz Antenna

- Computer

- Fasteners

Setup:

- Install Kismet on the Raspberry Pi or SBC.

- Install and configure the USB 2.4GHz/5.8GHz antenna on the SBC.

- Fasten your SBC onto the drone so that weight is evenly distributed laterally.

That’s it! Optionally, a PC can be configured to remotely connect to the SBC while flying, but this requires additional hardware, which may cause interference.

Kismet is a highly versatile, effective, and user friendly tool. It can be used for port scanning, packet sniffing, brute force attacks, and more.

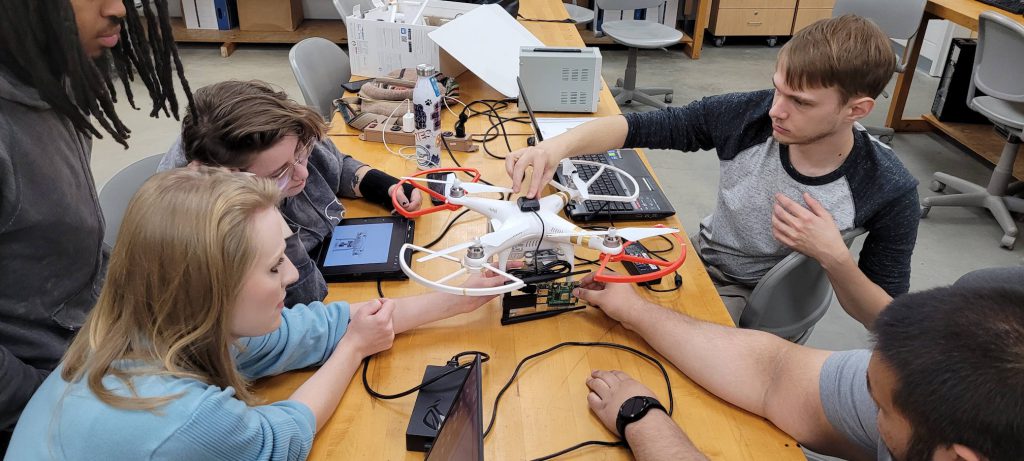

Background about this project:

The warflying drone was a portion of a larger capstone project required by Kent State for engineering students. Another element of the capstone project was creating a marketable product for the university. To achieve this secondary goal, the drone’s camera was live streamed via OBS to a text detection script in python, the code for which is available below.

CODE

import numpy as np

import cv2

from mss import mss

from pyzbar.pyzbar import decode

import pytesseract

import imutils

from PIL import Image

bounding_box = {'top': 200, 'left': 0, 'width': 640, 'height': 480}

sct = mss()

pytesseract.pytesseract.tesseract_cmd = r'C:\Program Files (x86)\Tesseract-OCR\tesseract.exe'

def cleantext(dirtytext=None):

newtext = ''

allowed_characters = ['A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'J', 'K', 'L',

'M', 'N', 'O', 'P', 'Q', 'R', 'S', 'T', 'U', 'V', 'W', 'X', 'Y', 'Z', '1', '2', '3', '4', '5',

'6', '7', '8', '9', '0', '-', '\n']

for x in dirtytext:

if x in allowed_characters:

newtext += x

return newtext

while True:

img = sct.grab(bounding_box)

img = np.array(img)

for code in decode(img):

print(code.type)

print(code.data.decode('utf-8'))

cv2.imshow('screen', np.array(img))

key = cv2.waitKey(1) & 0xFF

if key == ord("s"):

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) #convert to grey scale

gray = cv2.bilateralFilter(gray, 11, 17, 17) #Blur to reduce noise

edged = cv2.Canny(gray, 30, 200) #Perform Edge detection

cnts = cv2.findContours(edged.copy(), cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)

cnts = imutils.grab_contours(cnts)

cnts = sorted(cnts, key = cv2.contourArea, reverse = True)[:10]

screenCnt = None

for c in cnts:

peri = cv2.arcLength(c, True)

approx = cv2.approxPolyDP(c, 0.018 * peri, True)

if len(approx) == 4:

screenCnt = approx

break

if screenCnt is None:

detected = 0

print ("No contour detected")

else:

detected = 1

if detected == 1:

cv2.drawContours(img, [screenCnt], -1, (0, 255, 0), 3)

mask = np.zeros(gray.shape,np.uint8)

new_image = cv2.drawContours(mask,[screenCnt],0,255,-1,)

new_image = cv2.bitwise_and(img,img,mask=mask)

(x, y) = np.where(mask == 255)

(topx, topy) = (np.min(x), np.min(y))

(bottomx, bottomy) = (np.max(x), np.max(y))

Cropped = gray[topx:bottomx+1, topy:bottomy+1]

text = pytesseract.image_to_string(Cropped, config='--psm 11')

text = cleantext(text)

print("Detected Number is:",text)

cv2.imshow("Frame", img)

cv2.imshow('Cropped',Cropped)

cv2.waitKey(0)

if key == ord("z"):

quit()The text detection script uses publicly available libraries to read license plate numbers by over saturating the video, using AI on the oversaturated image to detect letters, and then using programmatic filters to find strings that match conventional license plate formats.

QUICK LINKS: